Artificial intelligence has changed how we communicate, learn, and create, and at the heart of this transformation is ChatGPT. You’ve probably used it to write essays, generate code, brainstorm marketing ideas, or even simulate deep conversations. But have you ever stopped to wonder: how does ChatGPT work? How does this AI understand our words, respond so intelligently, and sometimes even seem “human”?

In this deep-dive guide, we’ll explore how ChatGPT works—from the underlying neural network and deep learning principles to the mysterious process that allows it to perform what many call “deep research.” Let’s uncover the magic behind OpenAI’s most famous AI model.

What Is ChatGPT?

To understand how ChatGPT works, we first need to answer a simpler question: what is ChatGPT?

ChatGPT is an AI chatbot created by OpenAI, designed to generate human-like text in response to natural language input. The “GPT” in its name stands for Generative Pre-trained Transformer—a type of machine learning model based on the Transformer architecture invented by Google researchers in 2017.

This architecture revolutionized AI because it allowed models to handle words not in isolation, but in context. Instead of processing a sentence strictly one word at a time, as earlier recurrent models did, the Transformer weighs the relationships between all the words, phrases, and meanings in its input at once.

That’s why ChatGPT feels conversational. It doesn’t just respond to keywords—it understands tone, intent, and structure.

How Does ChatGPT Work?

At its core, ChatGPT works like a massive prediction engine. When you type something like “Write me a story about a dragon,” the model doesn’t “know” what a dragon is in the human sense—it predicts, based on billions of examples, what words are most likely to come next.

Let’s break it down step by step:

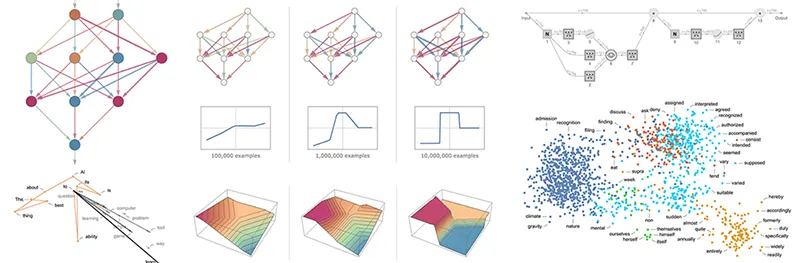

Training on Massive Text Data

Before ChatGPT could talk to you, it was trained on a huge dataset of text from books, websites, research papers, and conversations. This process, called pretraining, helps the model learn grammar, facts about the world, reasoning patterns, and even styles of writing.

During training, the model sees a sentence like:

“The cat sat on the ___.”

It learns to predict the next word (“mat”) by calculating probabilities based on patterns it has seen across millions of similar sentences. Multiply this process billions of times, and ChatGPT becomes capable of generating language that feels astonishingly natural.
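
To make the idea concrete, here is a minimal sketch of next-word prediction by counting. It is not how ChatGPT is actually trained (a neural network learns these probabilities rather than tallying them), and the three-sentence corpus is made up for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; real pretraining uses vastly more text.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the mat",
    "the cat sat on the sofa",
]

# Count which word follows each pair of preceding words.
follow_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for first, second, nxt in zip(words, words[1:], words[2:]):
        follow_counts[(first, second)][nxt] += 1

def next_word_probabilities(context):
    """Return {word: probability} for words seen after the two context words."""
    counts = follow_counts[tuple(context)]
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}

probs = next_word_probabilities(["on", "the"])
best = max(probs, key=probs.get)
print(probs)  # "mat" was seen twice after "on the", "sofa" once
print(best)   # mat
```

A real language model does the same job with a far richer notion of context, which is why it can generalize to sentences it has never seen.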

The Transformer Architecture

So, how does ChatGPT work inside its brain? It all happens within the Transformer—a network of layers designed to focus on different parts of a sentence simultaneously.

Each layer uses a mechanism called “attention”, which helps the model figure out which words are important in understanding meaning. For example, in the sentence:

“The dog that chased the cat was fast.”

The verb “was” describes “dog,” not “cat,” even though “cat” sits closer to it in the sentence. Transformers handle this by assigning attention weights, a mathematical way of saying: “this word relates more to that word.”

This multi-layered attention system is what allows ChatGPT to maintain context over long paragraphs, answer follow-up questions, and generate coherent essays.
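
As a rough illustration, the sketch below computes attention weights with the scaled dot-product formula used in Transformers. The two-dimensional vectors are hand-picked assumptions so that “was” lines up with “dog”; in a real model these vectors are learned and have thousands of dimensions.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over several keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hand-picked toy vectors (assumptions, not real model values).
key_vectors = {"dog": [2.0, 0.0], "cat": [0.0, 2.0]}
query_for_was = [2.0, 0.0]  # the query vector for "was"

weights = attention_weights(query_for_was, list(key_vectors.values()))
for word, weight in zip(key_vectors, weights):
    print(f"was -> {word}: {weight:.2f}")
```

With these toy vectors, “dog” receives most of the weight, which mirrors how the model links “was” to its subject.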

Fine-Tuning and Reinforcement Learning

Once the base model (like GPT-3 or GPT-4) is pretrained, it goes through fine-tuning. OpenAI refines the model using curated datasets and human feedback to align it with user expectations.

A key part of this stage is Reinforcement Learning from Human Feedback (RLHF). Human reviewers rate or rank model outputs, and those judgments are used to teach the model what’s considered a “good” or “helpful” response.

That’s why ChatGPT doesn’t just spew random text—it tries to be helpful, polite, and accurate (most of the time).
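
One common way to turn human ratings into a training signal is a pairwise preference loss: a separate reward model scores two responses, and the loss is small when it agrees with the human’s choice. The sketch below shows only that loss, under the assumption of a simple pairwise setup; it is not OpenAI’s actual training code, and the reward values are invented.

```python
import math

def preference_loss(reward_preferred, reward_rejected):
    """Negative log-probability that the human-preferred response wins.

    Equivalent to -log(sigmoid(reward_preferred - reward_rejected)).
    """
    margin = reward_preferred - reward_rejected
    return math.log(1.0 + math.exp(-margin))

# Invented reward scores for two candidate responses.
agrees = preference_loss(2.0, -1.0)     # reward model ranks them like the human
disagrees = preference_loss(-1.0, 2.0)  # reward model ranks them the other way

print(f"loss when agreeing:    {agrees:.3f}")
print(f"loss when disagreeing: {disagrees:.3f}")
```

Training pushes the reward model toward the low-loss case, and the language model is then tuned to produce responses that the reward model scores highly.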

The Secret Sauce: Probabilities and Tokens

When you ask ChatGPT a question, it doesn’t pull information from a database—it generates text from scratch, one token at a time.

A token is a chunk of text—sometimes a word, sometimes part of one. For example:

- “ChatGPT” might be split into “Chat” and “GPT.”

- “Amazing” might be one token.
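
Here is a minimal sketch of that splitting, using greedy longest-match against a tiny made-up vocabulary. Real tokenizers use a learned subword vocabulary (typically byte-pair encoding), so the actual splits for these words may differ.

```python
# Hypothetical vocabulary, chosen to reproduce the examples above.
VOCAB = {"Chat", "GPT", "Amazing"}

def tokenize(text, vocab=VOCAB):
    """Split text into the longest vocabulary entries, left to right."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate starting at position i first.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # No vocabulary entry matches: emit a single character.
            tokens.append(text[i])
            i += 1
    return tokens

print(tokenize("ChatGPT"))  # ['Chat', 'GPT']
print(tokenize("Amazing"))  # ['Amazing']
```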

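At each step, the model calculates a probability for every possible next token and then picks one, usually by sampling so that likelier tokens are chosen more often. The chosen token is appended to the text, and the process repeats until the response is complete.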

So, when you ask:

“Explain how ChatGPT works.”

The model analyzes your input, infers your intent, and predicts a logical response one token at a time. Each prediction involves billions of arithmetic operations, guided by the statistical patterns learned during training.
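
The sketch below shows the final step of a single prediction: turning raw scores (often called logits) into probabilities with softmax, then choosing a token. The candidate words and their scores are invented; a real model scores tens of thousands of tokens at every step.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Invented scores for a handful of candidate next tokens.
candidates = ["mat", "sofa", "roof", "moon"]
logits = [3.0, 2.0, 1.0, -1.0]

probs = softmax(logits)

# Greedy decoding: always take the single most likely token.
greedy = candidates[probs.index(max(probs))]

# Sampling: pick randomly in proportion to probability (seeded for repeatability).
rng = random.Random(0)
sampled = rng.choices(candidates, weights=probs, k=1)[0]

print(greedy)   # mat
print(sampled)  # depends on the seed
```

Sampling is one reason the same prompt can produce different answers on different runs.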

How Does ChatGPT Deep Research Work?

A popular question is: how does ChatGPT deep research work? After all, it seems to know everything—from historical facts to technical programming concepts.

Here’s the truth: ChatGPT doesn’t actually search the internet in real time unless a browsing tool is enabled. Instead, its responses come from patterns learned during training.

However, its deep reasoning capabilities—often mistaken for “deep research”—come from the sheer size of its neural network and the diversity of data it was trained on. When given a complex question, ChatGPT draws on associations across millions of examples to synthesize an answer that feels researched.

Think of it as an “instant researcher” that can connect ideas from across vast domains—without actually Googling anything.

Why Does ChatGPT Sound So Human?

The secret lies in context and style modeling. ChatGPT doesn’t just predict words—it predicts tone, emotion, and structure.

For example, when you say:

“Write a heartfelt apology,”

the model has learned from countless examples of apologies. It understands the rhythm of emotional writing—using softer words, empathetic phrasing, and gentle transitions.

This makes ChatGPT not just a factual engine, but a creative companion capable of mimicking human expression.

The Role of Tokens, Context, and Memory

Another important factor in how ChatGPT works is context length. The model can only “remember” a certain number of tokens from your conversation—known as the context window.

Early models like GPT-3 could handle around 2,000 tokens (roughly a few pages of text). Newer models like GPT-4 Turbo can process over 100,000 tokens, allowing for much deeper conversations, document analysis, and code generation.

This long memory is what enables features like deep research, where ChatGPT can analyze entire articles, cross-reference ideas, and maintain context across large inputs.
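
To see what a context window means in practice, here is a minimal sketch that keeps only the most recent messages that fit a token budget. Dropping the oldest messages is just one simple strategy, assumed here for illustration; how ChatGPT itself handles overflow is not covered in this article. The word-based `count_tokens` helper is a rough stand-in for a real tokenizer.

```python
def count_tokens(text):
    """Rough stand-in: count whitespace-separated words as tokens."""
    return len(text.split())

def fit_to_context(messages, max_tokens):
    """Keep the most recent messages whose combined size fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))

history = [
    "Hi, my name is Ana.",
    "Tell me about dragons.",
    "Dragons are mythical reptiles.",
    "What is my name?",
]

window = fit_to_context(history, max_tokens=12)
print(window)  # the first message no longer fits
```

With this small budget, the message containing the name falls outside the window, so a model limited to `window` could no longer answer the final question.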

How ChatGPT Improves Over Time

Each generation of the GPT models behind ChatGPT (GPT-3, GPT-3.5, GPT-4, and beyond) builds on the same fundamental architecture, with more data, more parameters, and refined training.

The number of parameters (the learned weights on connections between artificial neurons) is a rough measure of a model’s capacity. For example:

- GPT-2: ~1.5 billion parameters

- GPT-3: ~175 billion

- GPT-4: not disclosed by OpenAI; unofficial estimates range into the trillions

More parameters generally let the model capture subtler patterns, make more nuanced judgments, and respond more creatively, although training data and fine-tuning matter as well.
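
Where do numbers like these come from? A common back-of-the-envelope rule estimates a Transformer’s size as about 12 × layers × width², ignoring embeddings and other small terms. The sketch below applies it using the layer counts and widths published for the largest GPT-2 and GPT-3 models; the formula is an approximation, not an exact count.

```python
def approx_transformer_params(n_layers, d_model):
    """Rough weight count for the attention and feed-forward blocks.

    Each layer has about 4 * d_model^2 attention weights and
    8 * d_model^2 feed-forward weights (assuming a 4x hidden expansion).
    Embeddings, biases, and layer norms are ignored.
    """
    return 12 * n_layers * d_model ** 2

gpt2_largest = approx_transformer_params(n_layers=48, d_model=1600)
gpt3 = approx_transformer_params(n_layers=96, d_model=12288)

print(f"GPT-2 (largest): ~{gpt2_largest / 1e9:.2f} billion")
print(f"GPT-3:           ~{gpt3 / 1e9:.0f} billion")
```

Both estimates land close to the figures in the list above, which shows how quickly the count grows as models get deeper and wider.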

Applications of ChatGPT

Understanding how ChatGPT works also helps us appreciate its applications:

- Education: Tutoring, essay feedback, language learning.

- Business: Customer service, marketing copy, brainstorming.

- Coding: Debugging, explaining algorithms, writing scripts.

- Research: Summarizing papers, generating outlines, synthesizing ideas.

In each case, the same prediction mechanism powers entirely different types of reasoning—whether emotional, logical, or creative.

ChatGPT vs. Search Engines

A common confusion arises around ChatGPT deep research versus traditional search. Unlike Google, which retrieves documents, ChatGPT generates answers.

If you ask Google:

“How does ChatGPT work?”

you get links to articles.

If you ask ChatGPT the same question, you get a synthesized explanation based on thousands of sources it has “absorbed” during training. It’s not fetching data—it’s predicting what a correct answer should sound like.

Limitations of ChatGPT

Even though ChatGPT seems brilliant, it has limits.

- It doesn’t have real-time knowledge (unless browsing is enabled).

- It can hallucinate, producing confidently incorrect statements.

- It lacks true understanding—it predicts language, not meaning.

However, continuous fine-tuning and hybrid systems (like ChatGPT connected to web search or internal databases) are closing this gap rapidly.
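
A hybrid system of this kind can be sketched in a few lines: look up the most relevant document first, then hand it to the model as part of the prompt. Everything here is hypothetical, including the sample documents, the word-overlap scoring, and the prompt wording; production systems typically use more sophisticated search.

```python
def word_set(text):
    """Lowercase the text and split it into a set of words without punctuation."""
    cleaned = text.lower().replace("?", "").replace(".", "")
    return set(cleaned.split())

def retrieve(question, docs):
    """Return the document sharing the most words with the question."""
    question_words = word_set(question)
    return max(docs, key=lambda doc: len(question_words & word_set(doc)))

def build_prompt(question, docs):
    """Combine the retrieved document and the question into one prompt."""
    context = retrieve(question, docs)
    return (
        f"Context: {context}\n"
        f"Question: {question}\n"
        "Answer using only the context above."
    )

# Hypothetical internal knowledge base.
documents = [
    "The office opens at 9 am on weekdays.",
    "Refunds are processed within 5 business days.",
    "Support is available by email.",
]

prompt = build_prompt("When are refunds processed?", documents)
print(prompt)
```

Because the answer is grounded in a retrieved document rather than in training data alone, this approach can supply up-to-date facts and reduce hallucinations.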

So, how does ChatGPT work? It’s the art of prediction turned into intelligence. By learning from massive datasets, applying the Transformer architecture, and refining its responses through human feedback, ChatGPT can understand, generate, and interact in ways that feel almost alive. While it doesn’t “think” like us, it mirrors our patterns of language and reasoning so closely that it bridges the gap between human thought and machine learning. And as AI continues to evolve, ChatGPT’s deep research capabilities will only grow stronger—transforming how we learn, create, and communicate in the digital age.
